A list of documents managed through the Web Site related to this project.

This is the multi-page printable view of this section. Click here to print.

Documentation

- 1: Abstract

- 2: Introduction

- 3: Metadata Subgroup

- 4: Publications

- 5: Team

- 6: Surrogates

- 6.1: AutoPhaseNN: unsupervised physics-aware deep learning of 3D nanoscale Bragg coherent diffraction imaging

- 6.2: Calorimeter surrogates

- 6.3: Virtual tissue

- 6.4: Cosmoflow

- 6.5: Fully ionized plasma fluid model closures

- 6.6: Ions in nanoconfinement

- 6.7: miniWeatherML

- 6.8: OSMI

- 6.9: Particle dynamics

- 6.10: PtychoNN: deep learning network for ptychographic imaging that predicts sample amplitude and phase from diffraction data.

- 7: Software

- 8: Meeting Notes

- 8.1: Poster

- 8.2: Links

- 8.3: Meeting Notes 02-05-2024

- 8.4: Meeting Notes 01-08-2024

- 8.5: Meeting Notes 10-30-2023

- 8.6: Meeting Notes 09-25-2023

- 8.7: Meeting Notes 08-25-2023

- 8.8: Meeting Notes 07-31-2023

- 8.9: Meeting Notes 06-26-2023

- 8.10: Meeting Notes 05-29-2023

- 8.11: Meeting Notes 04-03-2023

- 8.12: Meeting Notes 02-27-2023

- 8.13: Meeting Notes 01-30-2023

- 8.14: Meeting Notes 01-05-2023

- 8.15: Meeting Notes 11-28-2022

- 8.16: Meeting Notes 10-31-2022

- 8.17: Meeting Notes 09-26-2022

- 8.18: Meeting Notes 08-15-2022

- 8.19: Meeting Notes 06-27-2022

- 8.20: Meeting Notes 05-23-2022

- 8.21: Meeting Notes 04-25-2022

- 8.22: Meeting Notes 03-19-2022

- 8.23: Meeting Notes 02-14-2022

- 8.24: Meeting Notes 01-10-2022

- 8.25: Meeting Notes 10-21-2021

- 8.26: Meeting Notes 09-27-2021

- 8.27: Meeting Notes 08-30-2021

- 8.28: Meeting Notes 07-26-2021

- 8.29: Meeting Notes 06-29-2021

- 8.30: Meeting Notes 05-24-2021

- 8.31: Meeting Notes 04-19-2021

- 8.32: Meeting Notes 03-23-2021

- 8.33: Meeting Notes 02-20-2021

- 8.34: Meeting Notes 01-20-2021

- 9: Contribution Guidelines

1 - Abstract

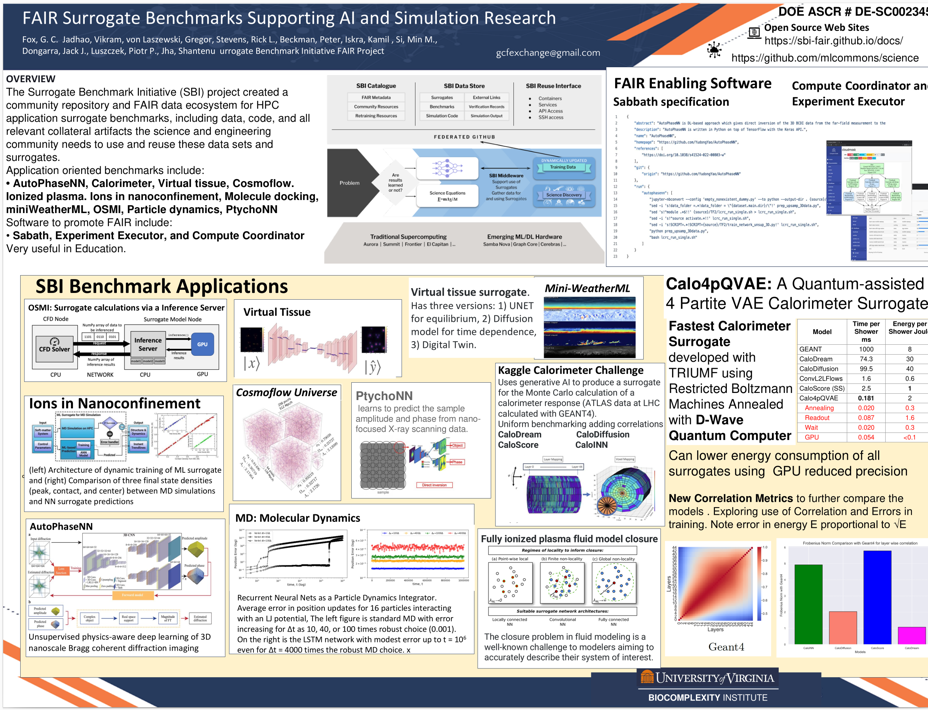

The Surrogate Benchmark Initiative (SBI) abstract as presented at the DOE ASCR Meeting, Feb 2024

Replacing traditional HPC computations with deep learning surrogates can dramatically improve the performance of simulations. We need to build repositories for AI models, datasets, and results that are easily used with FAIR metadata. These must cover a broad spectrum of use cases and system issues. The need for heterogeneous architectures means new software and performance issues. Further surrogate performance models are needed. The SBI (Surrogate Benchmark Initiative) collaboration between Argonne National Lab, Indiana University, Rutgers, University of Tennessee, and Virginia (lead) with MLCommons addresses these issues. The collaboration accumulates existing and generates new surrogates and hosts them (a total of around 20) in repositories. Selected surrogates become MLCommons benchmarks. The surrogates are managed by a FAIR metadata system, SABATH, developed by Tennessee and implemented for our repositories by Virginia.

The surrogate domains are Bragg coherent diffraction imaging, ptychographic imaging, Fully ionized plasma fluid model closures, molecular dynamics(2),

turbulence in computational fluid dynamics, cosmology, Kaggle calorimeter challenge(4), virtual tissue simulations(2), and performance tuning. Rutgers built a taxonomy using previous work and protein-ligand docking, which will be quantified using six mini-apps representing the system structure for different surrogate uses. Argonne has studied the data-loading and I/O structure for deep learning using inter-epoch and intra-batch reordering to improve data reuse. Their system addresses communication with the aggregation of small messages. They also study second-order optimizers using compression balancing accuracy and compression level. Virginia has used I/O parallelization to further improve performance. Indiana looked at ways of reducing the needed training set size for a given surrogate accuracy.1,2,3

Refernces

Web Page for Surrogate Benchmark Initiative SBI: FAIR Surrogate Benchmarks Supporting AI and Simulation Research. Web Page, January 2024. URL: https://sbi-fair.github.io/. ↩︎

Publications: https://sbi-fair.github.io/docs/publications/ ↩︎

Meeting summaries: https://docs.google.com/document/d/1cqMOkV9Cag6EB6HI6fR20gwhVwUeG5yijtJ3aEW0Crs/ ↩︎

2 - Introduction

The Surrogate Benchmark Initiative (SBI) project will create a community repository and FAIR data ecosystem for HPC application surrogate benchmarks, including data, code, and all relevant collateral artifacts the science and engineering community needs to use and reuse these data sets and surrogates.

Like nearly every field of science and engineering today, Computational Science using High Performance Computing (HPC) is being transformed by the ongoing revolution in Artificial Intelligence (AI), especially by the use of data-driven Deep Neural Network (DNN) techniques. In particular, DNN surrogate models 1 2 3, are being used to replace either part or all of traditional large-scale HPC simulations, achieving remarkable performance improvements (e.g., several orders of magnitude) in the process 4 5 6 7 8. Having been trained on data produced by actual runs of a given HPC simulation, such a surrogate can imitate, with high fidelity, part or all of that simulation, producing the same outcomes for a given set of inputs, but at far less cost in time and energy.

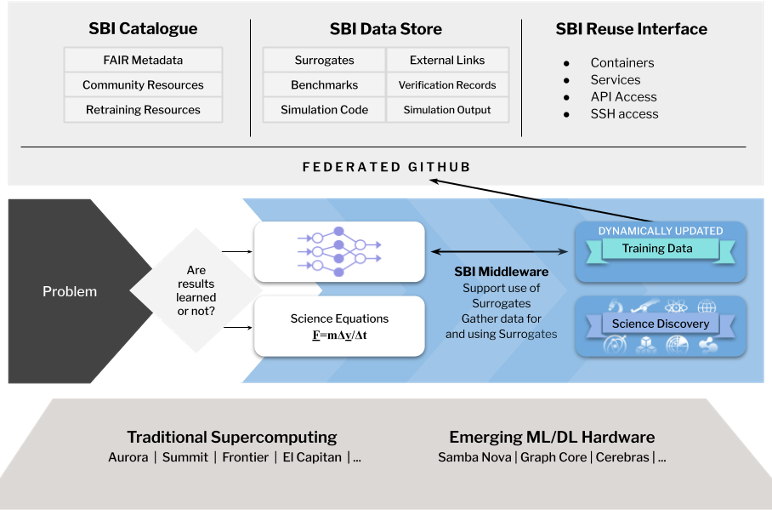

Figure 1. The Surrogate Benchmark Initiative (SBI)and its components

As a world leader in HPC for many decades, the Department of Energy will undoubtedly seek to exploit the power of such AI-driven surrogates, especially because of the end of Dennard scaling and Moore’s law. However, at present, there are no accepted benchmarks for such surrogates, and so no way to measure progress or inform the codesign of new HPC systems to support their use. The Surrogate Benchmark Initiative (SBI) project proposed below aims to address this fundamental problem by creating a community repository and FAIR data ecosystem for HPC application surrogate benchmarks, including data, code, and all relevant collateral artifacts the science and engineering community needs to use and reuse these data sets and surrogates.

To make “… scientific data publicly available to the AI community so that algorithms, tools, and techniques work for science,” we propose a community-driven, FAIR benchmarking activity that will 1) support AI research into different attractive approaches and 2) provide exemplars with reference implementations that will enable surrogates to be extended across a wide range of scientific fields, while encompassing the many different aspects of simulation where surrogates are useful. The key components of the project are depicted in Figure 1 above.

By collaborating with the major industry organization in this area - MLPerf and mirroring their process as much as possible, we will both increase the value of and obtain industry involvement in the SBI benchmarks. MLPerf has over 80 institutional members (mainly from industry) and strong existing involvement of the Department of Energy laboratories through the HPC working group inside MLPerf, which is now being extended with a science data working group. To ensure that FAIR principles are rigorously followed, we will initially set up data and model repositories outside MLPerf. Containers and service specifications such as OpenAPI will be systematically used. We will then explore how much can be usefully and FAIRly integrated with MLPerf, as our repositories have related but different goals and constraints from MLPerf. To learn how to effectively and efficiently set up FAIR repositories, we will start with (updates of) existing surrogates from team members.

Simultaneously, we will reach out to the community of experienced users building on our recent review 2 and recent papers 4, 9, 10. The outreach will use permanent SBI working groups with the Zoom/Meet/Teams/BlueJeans/Slack/cloud support that is now common and these will link to appropriate MLPerf groups. Online tutorials will be constructed based on the data and AI models that will support the broad understanding of the use and design of surrogates. These tutorials will also be designed so that they can help other stakeholders that need to understand the value of and requirements for surrogates; this includes the systems software/middleware and hardware architecture communities. The tutorials will be an early goal so we can reach out to domain scientists with important simulation codes but so far little or unsophisticated surrogate use.

A key aspect of SBI will be the development of an efficient generic surrogate architecture and accompanying middleware that will support the derivation and use of surrogates across many fields. Another specific activity will be the support of the use of benchmarks in the uncertainty quantification of the surrogate estimates. Thirdly there will be important studies of the amount of training data needed to get reliable surrogates for a given accuracy choice. We have already developed an effective performance model for surrogates but this needs extension as deeper uses of surrogates become understood and populated in our repositories.

We will link the repositories to important hardware systems including major DoE and NSF environments, commercial high-performance clouds, and available novel hardware. The study of the emerging AI systems space is an important goal of our project as our benchmarks stress both AI and simulation performance and so may not give the same conclusions as purely AI-focused benchmarks. Although we initially stress simulation surrogates, we will also consider AI surrogates for big data computations.

We intend that our repositories will generate active research from both the participants in our project and the broad community of AI and domain scientists. The FAIR ease of use, tutorials, and links to relevant execution platforms will be important. To initiate and foster strong virtual community support we will also use hackathons, Meetups, journal special issues, conference tutorials, and exhibits to nurture the outside use of our resources. As well as advancing research, which is our focus, we expect the project will be valuable for education and training. The project will explicitly fund staff to make sure that non-project users are properly supported and that our use of FAIR principles is effective.

Refernces

Geoffrey Fox, Shantenu Jha, “Understanding ML driven HPC: Applications and Infrastructure,” in IEEE eScience 2019 Conference, San Diego, California [Online]. Available: https://escience2019.sdsc.edu/ ↩︎

Geoffrey Fox, Shantenu Jha, “Learning Everywhere: A Taxonomy for the Integration of Machine Learning and Simulations,” in IEEE eScience 2019 Conference, San Diego, California [Online]. Available: https://arxiv.org/abs/1909.13340 ↩︎ ↩︎

Geoffrey Fox, James A. Glazier, JCS Kadupitiya, Vikram Jadhao, Minje Kim, Judy Qiu, James P. Sluka, Endre Somogyi, Madhav Marathe, Abhijin Adiga, Jiangzhuo Chen, Oliver Beckstein, and Shantenu Jha, “Learning Everywhere: Pervasive Machine Learning for Effective High-Performance Computation,” in HPDC Workshop at IPDPS 2019, Rio de Janeiro, 2019 [Online]. Available: https://arxiv.org/abs/1902.10810, http://dsc.soic.indiana.edu/publications/Learning_Everywhere_Summary.pdf ↩︎

M. F. Kasim, D. Watson-Parris, L. Deaconu, S. Oliver, P. Hatfield, D. H. Froula, G. Gregori, M. Jarvis, S. Khatiwala, J. Korenaga, J. Topp-Mugglestone, E. Viezzer, and S. M. Vinko, “Up to two billion times acceleration of scientific simulations with deep neural architecture search,” arXiv [stat.ML], 17-Jan-2020 [Online]. Available: http://arxiv.org/abs/2001.08055 ↩︎ ↩︎

JCS Kadupitiya , Geoffrey C. Fox , and Vikram Jadhao, “Machine learning for performance enhancement of molecular dynamics simulations,” in International Conference on Computational Science ICCS2019, Faro, Algarve, Portugal, 2019 [Online]. Available: http://dsc.soic.indiana.edu/publications/ICCS8.pdf ↩︎

A. Moradzadeh and N. R. Aluru, “Molecular Dynamics Properties without the Full Trajectory: A Denoising Autoencoder Network for Properties of Simple Liquids,” J. Phys. Chem. Lett., vol. 10, no. 24, pp. 7568–7576, Dec. 2019 [Online]. Available: http://dx.doi.org/10.1021/acs.jpclett.9b02820 ↩︎

Y. Sun, R. F. DeJaco, and J. I. Siepmann, “Deep neural network learning of complex binary sorption equilibria from molecular simulation data,” Chem. Sci., vol. 10, no. 16, pp. 4377–4388, Apr. 2019 [Online]. Available: http://dx.doi.org/10.1039/c8sc05340e ↩︎

F. Häse, I. Fdez Galván, A. Aspuru-Guzik, R. Lindh, and M. Vacher, “How machine learning can assist the interpretation of ab initio molecular dynamics simulations and conceptual understanding of chemistry,” Chem. Sci., vol. 10, no. 8, pp. 2298–2307, Feb. 2019 [Online]. Available: http://dx.doi.org/10.1039/c8sc04516j ↩︎

O. Obiols-Sales, A. Vishnu, N. Malaya, and A. Chandramowlishwaran, “CFDNet: a deep learning-based accelerator for fluid simulations,” arXiv [physics.flu-dyn]. 2020 [Online]. Available: http://arxiv.org/abs/2005.04485 ↩︎

J. A. Tallman, M. Osusky, N. Magina, and E. Sewall, “An Assessment of Machine Learning Techniques for Predicting Turbine Airfoil Component Temperatures, Using FEA Simulations for Training Data,” in ASME Turbo Expo 2019: Turbomachinery Technical Conference and Exposition, 2019 [Online]. Available: https://asmedigitalcollection.asme.org/GT/proceedings-abstract/GT2019/58646/V05AT20A002/1066873. [Accessed: 23-Feb-2020] ↩︎

3 - Metadata Subgroup

This subgroup is lead but University of Tennessee, Knoxville.

Schema Development

As part of the logging, reporting activities, this subgroup is tasked to create appropriate schema to follow the FAIR principles. Below is a general overview of the major hierarchy of data that needs to be recorded for reproducibility.

- Hardware specifications

- Compute: CPUs, Accelerators

- Memory: caches, NUMA

- Network: on-node CPU and accelerator coherency, NIC and off-node switches

- Peripherals

- Storage: primary (SSD), secondary (HDD), tertiary (RAID/remote)

- Firmware: ID/release date

- Software stack

- Compiler: GCC, Clang, vendor

- AI framework: TensorFlow, PyTorch, Keras, MxNet

- Tensor backend: JAX, TVM

- Runtime: JVM, OpenMP, CUDA

- Messaging API: MPI, NCCL, RCCL

- OS: Linux

- Container: Singularity, Docker, CharlieCloud

- Input data

- Data sets (version, size)

- Image: MNIST digits/fashion, CIFAR 10/100, ImageNet, VGG

- Language: Transformer

- Science: instrument, simulation

- Annotations

- Data sets (version, size)

- Model data

- Release date, ID, repo/branch/tag/hash, URL

- Output data

- Performance rate: training, inference

- Power draw: training, inference

- Energy consumption

- Convergence: epochs

- Accuracy, recall

4 - Publications

The collection of publications related to this project.

- Note: Please do not edit this page as it is automatically generated. To add new refernces please edit the bibtex file

[1] G. Fox, P. Beckman, S. Jha, P. Luszczek, and V. Jadhao, “Surrogate benchmark initiative SBI: FAIR surrogate benchmarks supporting AI and simulation research,” in ASCR computer science (CS) principal investigators (PI) meeting, Atlanta, GA: U.S. Department of Energy (DOE), Office of Science (SC), Feb. 2024, p. 1. Available: https://github.com/sbi-fair/sbi-fair.github.io/raw/main/pub/doe_abstract.pdf

[2] T. Zhong, J. Zhao, X. Guo, Q. Su, and G. Fox, “RINAS: Training with dataset shuffling can be general and fast.” 2023. Available: https://arxiv.org/abs/2312.02368

[3] C. Luo, T. Zhong, and G. Fox, “RTP: Rethinking tensor parallelism with memory deduplication.” 2023. Available: https://arxiv.org/abs/2311.01635

[4] “Quadri-partite quantum-assisted VAE as a calorimeter surrogate,” in Bulletin of the american physical society, in APS march meeting. American Physical Society Sites. Available: https://meetings.aps.org/Meeting/MAR24/Session/Y50.5

[5] J. Q. Toledo-Marín, G. Fox, J. P. Sluka, and J. A. Glazier, “Deep learning approaches to surrogates for solving the diffusion equation for mechanistic real-world simulations.” 2021. Available: https://arxiv.org/abs/2102.05527

[6] J. Q. Toledo-Marín, G. Fox, J. P. Sluka, and J. A. Glazier, “Deep learning approaches to surrogates for solving the diffusion equation for mechanistic real-world simulations,” Frontiers in Physiology, vol. 12, 2021, doi: 10.3389/fphys.2021.667828.

[7] J. Kadupitiya, F. Sun, G. Fox, and V. Jadhao, “Machine learning surrogates for molecular dynamics simulations of soft materials,” Journal of Computational Science, vol. 42, p. 101107, 2020, Available: https://par.nsf.gov/servlets/purl/10188151

[8] V. Jadhao and J. Kadupitiya, “Integrating machine learning with hpc-driven simulations for enhanced student learning,” in 2020 IEEE/ACM workshop on education for high-performance computing (EduHPC), IEEE, 2020, pp. 25–34. Available: https://api.semanticscholar.org/CorpusID:221376417

[9] A. Clyde et al., “Protein-ligand docking surrogate models: A SARS-CoV-2 benchmark for deep learning accelerated virtual screening.” 2021. Available: https://arxiv.org/abs/2106.07036

[10] E. A. Huerta et al., “FAIR for AI: An interdisciplinary and international community building perspective,” Scientific Data, vol. 10, no. 1, p. 487, 2023, Available: https://doi.org/10.1038/s41597-023-02298-6

[11] G. von Laszewski, J. P. Fleischer, and G. C. Fox, “Hybrid reusable computational analytics workflow management with cloudmesh.” 2022. Available: https://arxiv.org/abs/2210.16941

[12] V. Chennamsetti et al., “MLCommons cloud masking benchmark with early stopping.” 2023. Available: https://arxiv.org/abs/2401.08636

[13] G. von Laszewski and R. Gu, “An overview of MLCommons cloud mask benchmark: Related research and data.” 2023. Available: https://arxiv.org/abs/2312.04799

[14] G. von Laszewski et al., “Whitepaper on reusable hybrid and multi-cloud analytics service framework.” 2023. Available: https://arxiv.org/abs/2310.17013

[15] G. von Laszewski, J. P. Fleischer, G. C. Fox, J. Papay, S. Jackson, and J. Thiyagalingam, “Templated hybrid reusable computational analytics workflow management with cloudmesh, applied to the deep learning MLCommons cloudmask application,” in eScience’23, Limassol, Cyprus: Second Workshop on Reproducible Workflows, Data,; Security (ReWorDS 2022), 2023. Available: https://github.com/cyberaide/paper-cloudmesh-cc-ieee-5-pages/raw/main/vonLaszewski-cloudmesh-cc.pdf

[16] G. von Laszewski et al., “Opportunities for enhancing MLCommons efforts while leveraging insights from educational MLCommons earthquake benchmarks efforts,” Frontiers in High Performance Computing, vol. 1, no. 1233877, p. 31, 2023, Available: https://doi.org/10.3389/fhpcp.2023.1233877

[17] G. von Laszewski, “Cloudmesh.” Web Page, Jan. 2024. Available: https://github.com/orgs/cloudmesh/repositories

[18] G. von Laszewski, “Reusable hybrid and multi-cloud analytics service framework,” in 4th international conference on big data, IoT, and cloud computing (ICBICC 2022), Chengdu, China: IASED, 2022. Available: www.icbicc.org

5 - Team

- Geoffrey Fox, Indiana University (Principal Investigator)

- Vikram Jadhao, Indiana University (Co-Investigator)

- Gregor von Laszewski, Indiana University (Co-Investigator), laszewski@gmail.com, https://laszewski.github.io

- Rick Stevens, Argonne National Laboratory (Co-Investigator)

- Peter Beckman, Argonne National Laboratory (Co-Investigator)

- Kamil Iskra, Argonne National Laboratory (Co-Investigator)

- Min Si, Argonne National Laboratory (Co-Investigator)

- Jack Dongarra, University of Tennessee, Knoxville (Co-Investigator)

- Piotr Luszczek, University of Tennessee, Knoxville (Co-Investigator)

- Shantenu Jha, Rutgers University (Co-Investigator)

6 - Surrogates

A list of surrogates

6.1 - AutoPhaseNN: unsupervised physics-aware deep learning of 3D nanoscale Bragg coherent diffraction imaging

Metadata

Model autophasenn.json

Datasets autoPhaseNN_aicdi.json

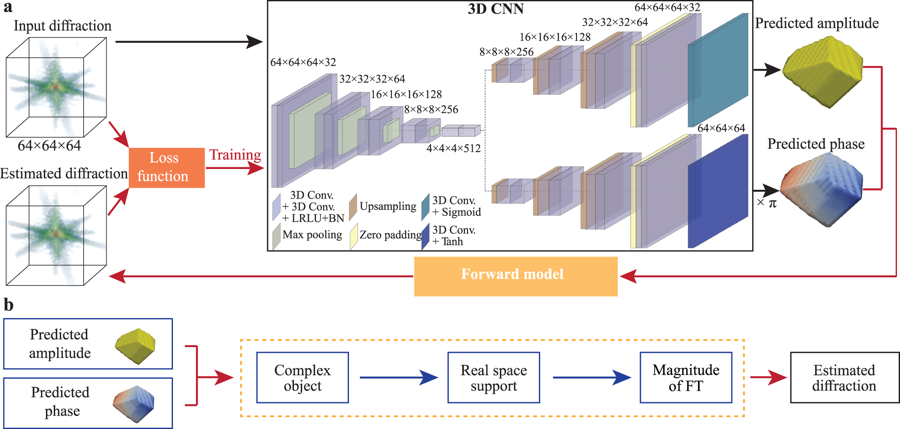

AutoPhaseNN 1, a physics-aware unsupervised deep convolutional neural network (CNN) that learns to solve the phase problem without ever being shown real space images of the sample amplitude or phase. By incorporating the physics of the X-ray scattering into the network design and training, AutoPhaseNN learns to predict both the amplitude and phase of the sample given the measured diffraction intensity alone. Additionally, unlike previous deep learning models, AutoPhaseNN does not need the ground truth images of sample’s amplitude and phase at any point, either in training or in deployment. Once trained, the physical model is discarded and only the CNN portion is needed which has learned the data inversion from reciprocal space to real space and is ~100 times faster than the iterative phase retrieval with comparable image quality. Furthermore, we show that by using AutoPhaseNN’s prediction as the learned prior to iterative phase retrieval, we can achieve consistently higher image quality, than neural network prediction alone, at 10 times faster speed than iterative phase retrieval alone.

Fig. 1: Schematic of the neural network structure of AutoPhaseNN model during training.

a) The model consists of a 3D CNN and the X-ray scattering forward model. The 3D

CNN is implemented with a convolutional auto-encoder and two deconvolutional

decoders using the convolutional, maximum pooling, upsampling and zero padding

layers. The physical knowledge is enforced via the Sigmoid and Tanh activation

functions in the final layers. b The X-ray scattering forward model includes the

numerical modeling of diffraction and the image shape constraints. It takes the

amplitude and phase from the 3D CNN output to form the complex image. Then the

estimated diffraction pattern is obtained from the FT of the current estimation

of the real space image.

Image from: Yao, Y. et al / CC-BY

References

Yao, Y., Chan, H., Sankaranarayanan, S. et al. AutoPhaseNN: unsupervised physics-aware deep learning of 3D nanoscale Bragg coherent diffraction imaging. npj Comput Mater 8, 124 (2022). https://doi.org/10.1038/s41524-022-00803-w ↩︎ ↩︎

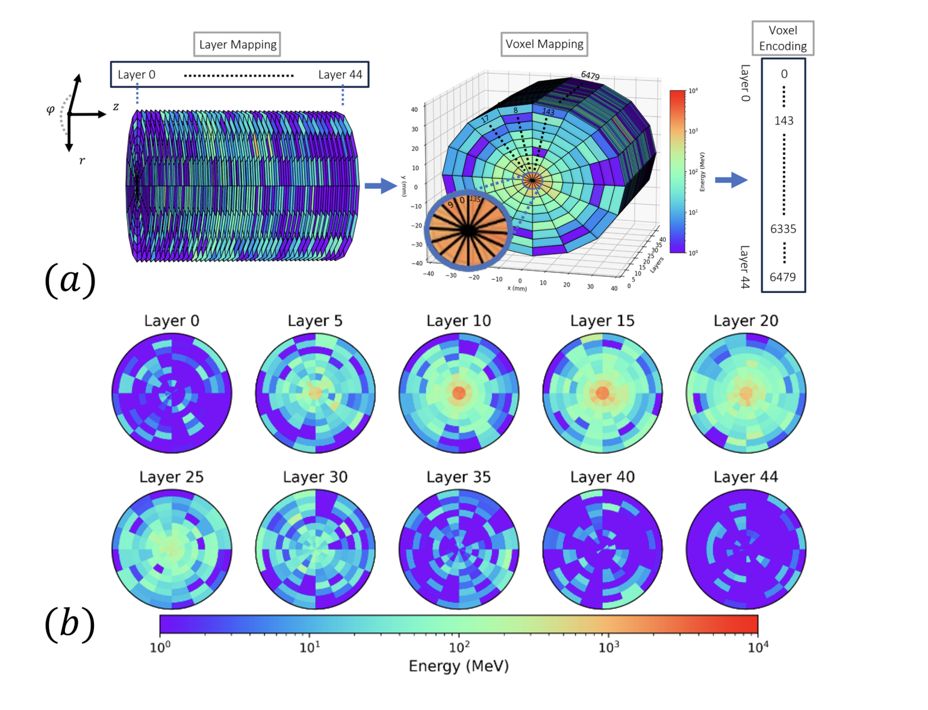

6.2 - Calorimeter surrogates

Overview

The Kaggle calorimeter challenge uses generative AI to produce a surrogate for the Monte Carlo calculation of a calorimeter response to an incident particle (ATLAS data at LHC calculated with GEANT4). Variational Auto Encoders, GANs, Normalizing Flows, and Diffusion Models. We also have a surrogate using a Quantum Computer (DWAVE) annealer to generate random samples. We have identified four different surrogates that are available openly from Kaggle and later submissions.

Figure 1: CaloChallenge Dataset.

Details

Accurate simulation plays a crucial role in particle physics by bridging theoretical models with experimental data to uncover the universe’s fundamental properties. At the Large Hadron Collider (LHC), simulations based on Monte Carlo methods model the interactions of billions of particles, including complex calorimeter shower events—cascades of secondary particles produced when high-energy particles hit detector materials. The widely-used Geant4 1 simulation toolkit provides highly detailed physics-based simulations, but its computational cost is extremely high, making up over 75% of the total simulation time 2. With the upcoming High-Luminosity LHC (HL-LHC) 3,4 upgrade in 2029, the collider will generate larger datasets with higher precision requirements, significantly increasing the demand for computational resources. To mitigate this, researchers are exploring generative models commonly used in image and text generation—as surrogate models that can generate realistic calorimeter showers at a fraction of the computational cost. In recent years, several approaches based on Generative Adversarial Networks(GAN) 5, 6, 7, 8, 9, 10, Diffusion 11 12, 13, 14, 15, 16, 17, 18, 19, Variational Autoencoders (VAEs) 20, 21, 22, 23, 24, 25, 26, 27, 28 and Normalizing Flows 29, 30, 31, 32, 33, 34, 35 have been proposed. However, evaluating these models remains challenging because the physical characteristics of calorimeter showers differ significantly from traditional image- and text-based data. 36, 37 conducted a rigorous evaluation of these generative models using standard datasets and a diverse set of metrics derived from physics, computer vision, and statistics. Although 36 sheds light on the existent correlations between layers, they do not quantify correlations between layers and voxels. In this work, we propose Correlation Frobenius Distance (CFD), an evaluation metric for generative models of calorimeter shower simulation. This metric measures how the consecutive layers and voxels of generated samples are correlated with each other compared to Geant4 samples. CFD helps evaluate the consistency of energy deposition patterns across layers, capturing the spatial correlations in the calorimeter shower. Lower CFD values indicate that the generated samples better preserve the correlations observed in Geant4 simulations. We compared four different models (CaloDream 19, CaloScore v2 18, CaloDiffusion 27, and CaloINN 33) on Dataset 2 38 from CaloChallenge 2022 13 for CFD, our observation reveals that CaloDream can capture correlations between consecutive layers and voxels the best. Furthermore, we explored the impact of using full versus mixed precision modes during inference for CaloDiffusion. Our observation shows that mixed precision inference does not speed up inference for Dataset 1 39 and Dataset 2 39. However, it significantly improves inference time for Dataset 3 39, without compromising performance. The Code is available in GitHub at 40.

Additional relevant references include:

Team contributed refernces include

References

Team contributed refernces are marked in bold

Agostinelli, Sea, et al. “GEANT4—a simulation toolkit.” Nuclear instruments and methods in physics research section A: Accelerators, Spectrometers, Detectors and Associated Equipment 506.3 (2003): 250-303. ↩︎

Muškinja, Miha, John Derek Chapman, and Heather Gray. “Geant4 performance optimization in the ATLAS experiment.” EPJ Web of Conferences. Vol. 245. EDP Sciences, 2020. ↩︎

“New Schedule for CERN’s Accelerators.” CERN, 5 Dec. 2023, [https://home.cern/news/news/accelerators/new-schedule-cerns-accelerators]:(https://home.cern/news/news/accelerators/new-schedule-cerns-accelerators). Accessed 28 Feb. 2025. ↩︎

“Computing at CERN.” CERN, https://home.web.cern.ch/science/computing. Accessed 28 Feb. 2025. ↩︎

ATLAS collaboration. “Fast simulation of the ATLAS calorimeter system with Generative Adversarial Networks.” ATLAS PUB Note, CERN, Geneva (2020). ↩︎

Ghosh, Aishik, and ATLAS collaboration. “Deep generative models for fast shower simulation in ATLAS.” Journal of Physics: Conference Series. Vol. 1525. No. 1. IOP Publishing, 2020. ↩︎

Giannelli, Michele Faucci, and Rui Zhang. “CaloShowerGAN, a generative adversarial network model for fast calorimeter shower simulation.” The European Physical Journal Plus 139.7 (2024): 597. ↩︎

Paganini, Michela, Luke de Oliveira, and Benjamin Nachman. “Accelerating science with generative adversarial networks: an application to 3D particle showers in multilayer calorimeters.” Physical review letters 120.4 (2018): 042003. ↩︎

de Oliveira, Luke, Michela Paganini, and Benjamin Nachman. “Learning particle physics by example: location-aware generative adversarial networks for physics synthesis.” Computing and Software for Big Science 1.1 (2017): 4. ↩︎

Paganini, Michela, Luke de Oliveira, and Benjamin Nachman. “CaloGAN: Simulating 3D high energy particle showers in multilayer electromagnetic calorimeters with generative adversarial networks.” Physical Review D 97.1 (2018): 014021. ↩︎

Acosta, Fernando Torales, et al. “Comparison of point cloud and image-based models for calorimeter fast simulation.” Journal of Instrumentation 19.05 (2024): P05003. ↩︎

Amram, Oz, and Kevin Pedro. “Denoising diffusion models with geometry adaptation for high fidelity calorimeter simulation.” Physical Review D 108.7 (2023): 072014. ↩︎

Buhmann, Erik, et al. “CaloClouds: fast geometry-independent highly-granular calorimeter simulation.” Journal of Instrumentation 18.11 (2023): P11025. ↩︎ ↩︎

Buhmann, Erik, et al. “CaloClouds II: ultra-fast geometry-independent highly-granular calorimeter simulation.” Journal of Instrumentation 19.04 (2024): P04020. ↩︎

Cresswell, Jesse C., and Taewoo Kim. “Scaling Up Diffusion and Flow-based XGBoost Models.” arXiv preprint arXiv:2408.16046 (2024). ↩︎

Madula, T., and V. M. Mikuni. “CaloLatent: Score-based Generative Modelling in the Latent Space for Calorimeter Shower Generation NeurIPS Workshop on Machine Learning and the Physical Sciences URL https://ml4physicalsciences. github. io/2023/files.” NeurIPS_ ML4PS_2023_19. pdf (2023). ↩︎

Mikuni, Vinicius, and Benjamin Nachman. “Score-based generative models for calorimeter shower simulation.” Physical Review D 106.9 (2022): 092009. ↩︎

Mikuni, Vinicius, and Benjamin Nachman. “CaloScore v2: single-shot calorimeter shower simulation with diffusion models.” Journal of Instrumentation 19.02 (2024): P02001. ↩︎ ↩︎

Favaro, Luigi, et al. “CaloDREAM–Detector Response Emulation via Attentive flow Matching.” arXiv preprint arXiv:2405.09629 (2024). ↩︎ ↩︎

Cresswell, Jesse C., et al. “CaloMan: Fast generation of calorimeter showers with density estimation on learned manifolds.” arXiv preprint arXiv:2211.15380 (2022). ↩︎

Buhmann, Erik, et al. “Decoding photons: Physics in the latent space of a BIB-AE generative network.” EPJ Web of Conferences. Vol. 251. EDP Sciences, 2021. ↩︎

Buhmann, Erik, et al. “Getting high: High fidelity simulation of high granularity calorimeters with high speed.” Computing and Software for Big Science 5.1 (2021): 13. ↩︎

Diefenbacher, Sascha, et al. “New angles on fast calorimeter shower simulation.” Machine Learning: Science and Technology 4.3 (2023): 035044. ↩︎

Salamani, Dalila, Anna Zaborowska, and Witold Pokorski. “MetaHEP: Meta learning for fast shower simulation of high energy physics experiments.” Physics Letters B 844 (2023): 138079. ↩︎

Abhishek, Abhishek, et al. “CaloDVAE: Discrete variational autoencoders for fast calorimeter shower simulation.” arXiv preprint arXiv:2210.07430 (2022). ↩︎

Caloqvae: Simulating high-energy particle calorimeter interactions using hybrid quantum-classical generative models ↩︎

Hoque, Sehmimul, et al. “CaloQVAE: Simulating high-energy particle-calorimeter interactions using hybrid quantum-classical generative models.” The European Physical Journal C 84.12 (2024): 1-7. ↩︎ ↩︎

Lu, Ian, et al. “Zephyr quantum-assisted hierarchical Calo4pQVAE for particle-calorimeter interactions.” arXiv preprint arXiv:2412.04677 (2024). ↩︎

Krause, Claudius, and David Shih. “Fast and accurate simulations of calorimeter showers with normalizing flows.” Physical Review D 107.11 (2023): 113003. ↩︎

Krause, Claudius, Ian Pang, and David Shih. “CaloFlow for CaloChallenge dataset 1.” SciPost Physics 16.5 (2024): 126. ↩︎

Buckley, Matthew R., et al. “Inductive simulation of calorimeter showers with normalizing flows.” Physical Review D 109.3 (2024): 033006. ↩︎

Diefenbacher, S., et al. “L2LFlows: generating high-fidelity 3D calorimeter images (2023).” arXiv preprint arXiv:2302.11594 18: P10017. ↩︎

Ernst, Florian, et al. “Normalizing flows for high-dimensional detector simulations.” arXiv preprint arXiv:2312.09290 (2023). ↩︎ ↩︎

Liu, Junze, et al. “Geometry-aware autoregressive models for calorimeter shower simulations.” arXiv preprint arXiv:2212.08233 (2022). ↩︎

Schnake, Simon, Dirk Krücker, and Kerstin Borras. “CaloPointFlow II generating calorimeter showers as point clouds.” arXiv preprint arXiv:2403.15782 (2024). ↩︎

Ahmad, Farzana Yasmin, Vanamala Venkataswamy, and Geoffrey Fox. “A comprehensive evaluation of generative models in calorimeter shower simulation.” arXiv preprint arXiv:2406.12898 (2024). ↩︎ ↩︎

Krause, Claudius, et al. “Calochallenge 2022: A community challenge for fast calorimeter simulation.” arXiv preprint arXiv:2410.21611 (2024). ↩︎

Ahmad, F. Y. Generated Samples of Dataset 2 from Calochallenge_2022. Zenodo, 17 Feb. 2025, doi:10.5281/zenodo.14883798. ↩︎

CaloChallenge Homepage*, calochallenge.github.io/homepage/. Accessed 3 Mar. 2025. ↩︎ ↩︎ ↩︎

GitHub: https://github.com/Aaheer17/Benchmarking_Calorimeter_Shower_Simulation_Generative_AI/tree/main ↩︎

Michele Faucci Giannelli, Gregor Kasieczka, Claudius Krause, Ben Nachman, Dalila Salamani, David Shih, Anna Zaborowska, Fast calorimeter simulation challenge 2022 - dataset 1,2 and 3 [data set]. zenodo., https://doi.org/10.5281/zenodo.8099322, https://doi.org/10.5281/zenodo.6366271, https://doi.org/10.5281/zenodo.6366324 (2022). ↩︎ ↩︎

ATLAS Collaboration, ATLAS software and computing HL-LHC roadmap, Tech. Rep. (Technical report, CERN, Geneva. http://cds.cern.ch/record/2802918, 2022). ↩︎ ↩︎ ↩︎

Conditioned quantum-assisted deep generative surrogate for particle-calorimeter interactions, J Quetzalcoatl Toledo-Marin, Sebastian Gonzalez, Hao Jia, Ian Lu, Deniz Sogutlu, Abhishek Abhishek, Colin Gay, Eric Paquet, Roger Melko, Geoffrey C Fox, Maximilian Swiatlowski, Wojciech Fedorko, 2024/10/30 arXiv preprint arXiv:2410.22870, Abstract: Particle collisions at accelerators such as the Large Hadron Collider, recorded and analyzed by experiments such as ATLAS and CMS, enable exquisite measurements of the Standard Model and searches for new phenomena. Simulations of collision events at these detectors have played a pivotal role in shaping the design of future experiments and analyzing ongoing ones. However, the quest for accuracy in Large Hadron Collider (LHC) collisions comes at an imposing computational cost, with projections estimating the need for millions of CPU-years annually during the High Luminosity LHC (HL-LHC) run 42. Simulating a single LHC event with Geant4 currently devours around 1000 CPU seconds, with simulations of the calorimeter subdetectors in particular imposing substantial computational demands 42. To address this challenge, we propose a conditioned quantum-assisted deep generative model. Our model integrates a conditioned variational autoencoder (VAE) on the exterior with a conditioned Restricted Boltzmann Machine (RBM) in the latent space, providing enhanced expressiveness compared to conventional VAEs. The RBM nodes and connections are meticulously engineered to enable the use of qubits and couplers on D-Wave’s Pegasus-structured \textit{Advantage} quantum annealer (QA) for sampling. We introduce a novel method for conditioning the quantum-assisted RBM using flux biases. We further propose a novel adaptive mapping to estimate the effective inverse temperature in quantum annealers. The effectiveness of our framework is illustrated using Dataset 2 of the CaloChallenge 41. ↩︎

Calorimeter Surrogate Research, Geoffrey Fox University of Virginia, 2024 https://docs.google.com/document/d/19g0Avj9SYbVH7qSxoVUnnFKeGMuBdD9JCHVmBQB466M/ ↩︎

Poster: https://drive.google.com/file/d/1PUiNDju_8N_wsDKI_W-g-jyCHb_5Hepo/ ↩︎

Extended abstract: Correlation Frobenius Distance: A Metric for Evaluating Generative Models in Calorimeter Shower Simulation, Farzana Yasmin Ahmada, Vanamala Venkataswamya, Geoffrey Fox, University of Virginia, https://docs.google.com/document/d/1ndHkJY41_pHYZZne58B4_7HJQKTCxPzeMWVMJ0bsnOE ↩︎

6.3 - Virtual tissue

Overview

Neural networks (NNs) have been demonstrated to be a viable alternative to traditional direct numerical evaluation algorithms, with the potential to accelerate computational time by several orders of magnitude. In the present paper we study the use of encoder-decoder convolutional neural network (CNN) algorithms as surrogates for steady-state diffusion solvers. The construction of such surrogates requires the selection of an appropriate task, network architecture, training set structure and size, loss function, and training algorithm hyperparameters. It is well known that each of these factors can have a significant impact on the performance of the resultant model. Our approach employs an encoder-decoder CNN architecture, which we posit is particularly wellsuited for this task due to its ability to effectively transform data, as opposed to merely compressing it. We systematically evaluate a range of loss functions, hyperparameters, and training set sizes. Our results indicate that increasing the size of the training set has a substantial effect on reducing performance fluctuations and overall error. Additionally, we observe that the performance of the model exhibits a logarithmic dependence on the training set size. Furthermore, we investigate the effect on model performance by using different subsets of data with varying features. Our results highlight the importance of sampling the configurational space in an optimal manner, as this can have a significant impact on the performance of the model and the required training time. In conclusion, our results suggest that training a model with a pre-determined error performance bound is not a viable approach, as it does not guarantee that edge cases with errors larger than the bound do not exist. Furthermore, as most surrogate tasks involve a high dimensional landscape, an ever increasing training set size is, in principle, needed, however it is not a practical solution.

Figure 1: Sketch of the NN architecture for virtual tissue surrogate.

References

Analyzing the Performance of Deep Encoder-Decoder Networks as Surrogates for a Diffusion Equation, J. Quetzalcoatl Toledo-Marin, James A. Glazier, Geoffrey Fox https://arxiv.org/pdf/2302.03786.pdf> ↩︎

There is an earlier surrogate referred to in this arxiv. It was published: Toledo-Marín J. Quetzalcóatl , Fox Geoffrey , Sluka James P. , Glazier James A., Deep Learning Approaches to Surrogates for Solving the Diffusion Equation for Mechanistic Real-World Simulations,Frontiers in Physiology, Vol. 12, 2021 doi: 10.3389/fphys.2021.667828, ISSNI 1664-042X, https://www.frontiersin.org/journals/physiology/articles/10.3389/fphys.2021.667828 ↩︎

6.4 - Cosmoflow

Metadata

Model cosmoflow.json

Datasets

cosmoUniverse_2019_05_4parE_tf_v2.json

cosmoUniverse_2019_05_4parE_tf_v2_mini.json

Overview

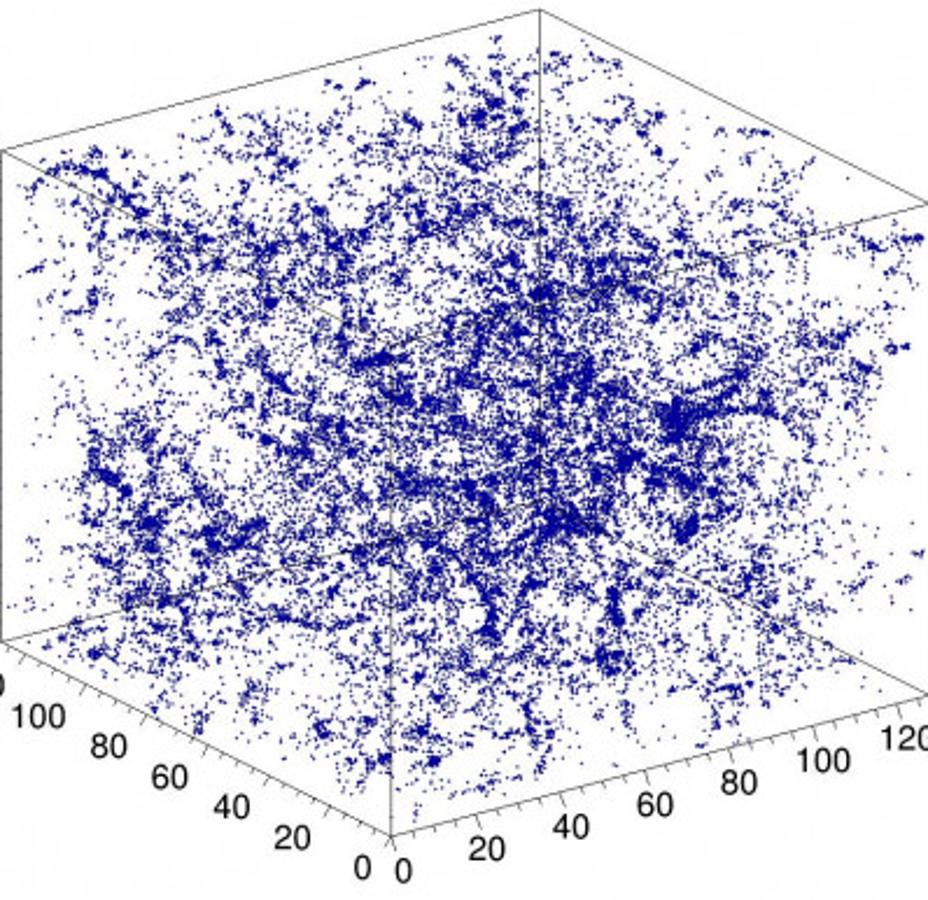

This application is based on the original CosmoFlow paper presented at SC18 and continued by the ExaLearn project, and adopted as a benchmark in the MLPerf HPC suite. It involves training a 3D convolutional neural network on N-body cosmology simulation data to predict physical parameters of the universe. The reference implementation for MLPerf HPC v0.5 CosmoFlow uses TensorFlow with the Keras API and Horovod for data-parallel distributed training. The dataset comes from simulations run by ExaLearn, with universe volumes split into cubes of size 128x128x128 with 4 redshift bins. The total dataset volume preprocessed for MLPerf HPC v0.5 in TFRecord format is 5.1 TB. The target objective in MLPerf HPC v0.5 is to train the model to a validation mean-average-error < 0.124. However, the problem size can be scaled down and the training throughput can be used as the primary objective for a small scale or shorter timescale benchmark.123

Figure 1: Example simulation of dark matter in the universe used as input to the CosmoFlow network. Copied from [NERSC](https://www.nersc.gov/news-publications/nersc-news/science-news/2018/nersc-intel-cray-harness-the-power-of-deep-learning-to-better-understand-the-universe/)

References

6.5 - Fully ionized plasma fluid model closures

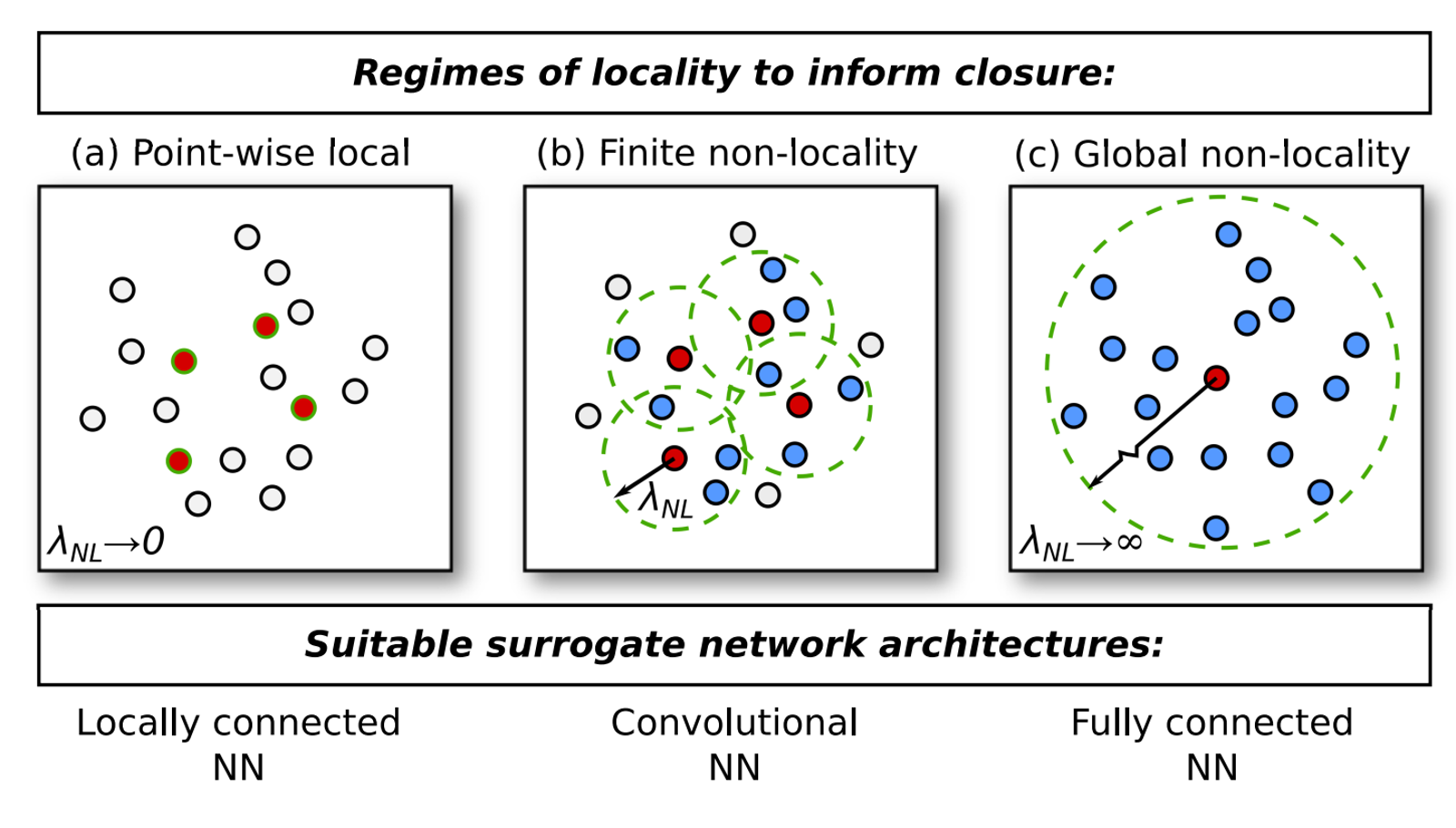

Fully ionized plasma fluid model closures (Argonne):1 The closure problem in fluid modeling is a well-known challenge to modelers aiming to accurately describe their system of interest. Analytic formulations in a wide range of regimes exist but a practical, generalized fluid closure for magnetized plasmas remains an elusive goal. There are scenarios where complex physics prevents a simple closure being assumed, and the question as to what closure to employ has a non-trivial answer. In a proof-of-concept study, Argonne researchers turned to machine learning to try to construct surrogate closure models that map the known macroscopic variables in a fluid model to the higher-order moments that must be closed. In their study, the researchers considered three closures: Braginskii, Hammett-Perkins, and Guo-Tang; for each of them, they tried three types of ANNs: locally connected, convolutional, and fully connected. Applying a physics-informed machine learning approach, they found that there is a benefit to tailoring a specific network architecture informed by the physics of the plasma regime each closure is designed for, rather than carelessly applying an unnecessarily complex general network architecture. will choose one of the surrogates and bring it up an early example for SBI with reference implementation and tutorial documentation. As a follow-up, the Argonne team will tackle more challenging problems.

Figure 1: Simple schematic of varying classes of closure formulations.

References

R. Maulik, N. A. Garland, X.-Z. Tang, and P. Balaprakash, “Neural network representability of fully ionized plasma fluid model closures,” arXiv [physics.comp-ph], 10-Feb-2020 [Online]. Available: http://arxiv.org/abs/2002.04106 ↩︎

6.6 - Ions in nanoconfinement

Metadata

Model nanoconfinement.json

Datasets nanoconfinement.json

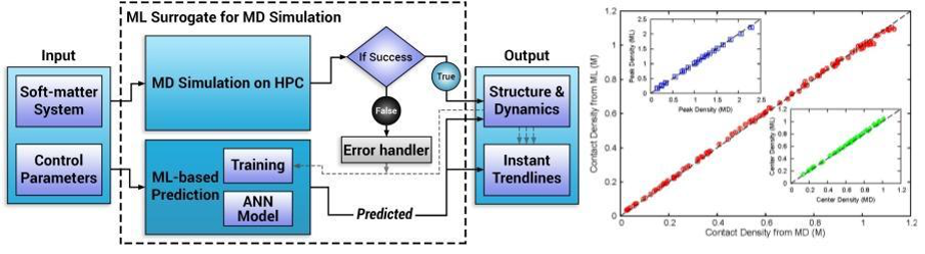

This application 1 2 3 studies ionic structure in electrolyte solutions in nanochannels with planar uncharged surfaces and can use multiple molecular dynamics (MD) codes including LAMMPS 4 which run on HPC supercomputers with OpenMP and MPI parallelization.

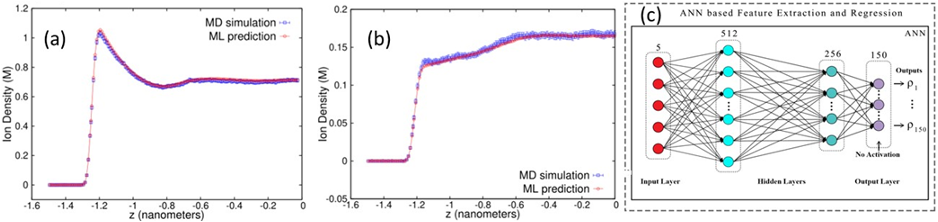

A dense neural-net (NN) was used to learn 150 final state characteristics based on the input of 5 parameters with typical results shown in fig. 2(b) with the NN results for three important densities tracking well the MD simulation results for a wide range of unseen input system parameters. Fig. 3(a,b) shows two typical density profiles with again the NN prediction tracking well the simulation. Input quantities were confinement length, positive ion valency, negative ion valency, salt concentration, and ion diameter. Figure 2(a) shows the runtime architecture for dynamic use and update of the NN and our middleware discussed in Sec. 3.2.6 will generalize this. The inference time for this on a single core is 104 times faster than the parallel code which is itself 100 times the sequential code. This surrogate approach is the first-of-its-kind in the area of simulating charged soft-matter systems and there are many other published papers in both biomolecular and material science presenting similar successful surrogates 5 with a NN architecture similar to fig. 3(c).

Fig. 2 a) Architecture of dynamic training of ML surrogate and b) Comparison of three final state densities (peak, contact, and center) between MD simulations and NN surrogate predictions [^5] [^51].

Fig. 3 (a,b) Two density profiles of confined ions for very different input parameters and comparing MD and NN. (c) Fully connected deep learning network used to learn the final densities. ReLU activation units are in the 512 and 256 node hidden layers. The output values were learned on 150 nodes.

References

JCS Kadupitiya , Geoffrey C. Fox , and Vikram Jadhao, “Machine learning for performance enhancement of molecular dynamics simulations,” in International Conference on Computational Science ICCS2019, Faro, Algarve, Portugal, 2019 [Online]. Available: http://dsc.soic.indiana.edu/publications/ICCS8.pdf ↩︎

J. C. S. Kadupitiya, F. Sun, G. Fox, and V. Jadhao, “Machine learning surrogates for molecular dynamics simulations of soft materials,” J. Comput. Sci., vol. 42, p. 101107, Apr. 2020 [Online]. Available: http://www.sciencedirect.com/science/article/pii/S1877750319310609 ↩︎

“Molecular Dynamics for Nanoconfinement.” [Online]. Available: https://github.com/softmaterialslab/nanoconfinement-md. [Accessed: 11-May-2020] ↩︎

S. Plimpton, “Fast Parallel Algorithms for Short Range Molecular Dynamics,” J. Comput. Phys., vol. 117, pp. 1–19, 1995 [Online]. Available: http://faculty.chas.uni.edu/~rothm/Modeling/Parallel/Plimpton.pdf ↩︎

Geoffrey Fox, Shantenu Jha, “Learning Everywhere: A Taxonomy for the Integration of Machine Learning and Simulations,” in IEEE eScience 2019 Conference, San Diego, California [Online]. Available: https://arxiv.org/abs/1909.13340 ↩︎

6.7 - miniWeatherML

Metadata

Model miniWeatherML.json

Datasets miniWeatherML.json

Overview

MiniWeatherML is a playground for learning and developing Machine Learning (ML) surrogate models and workflows. It is based on a simplified weather model simulating flows such as supercells that are realistic enough to be challenging and simple enough for rapid prototyping in:

- Data generation and curation

- Machine Learning model training

- ML model deployment and analysis

- End-to-end workflows

Figure 1: CANcer Distributed Learning Environment

References

6.8 - OSMI

Overview

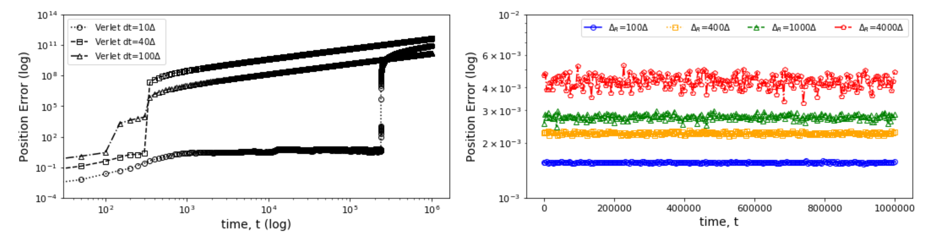

We explore the relationship between certain network configurations and the performance of distributed Machine Learning systems. We build upon the Open Surrogate Model Inference (OSMI) Benchmark, a distributed inference benchmark for analyzing the performance of machine-learned surrogate models developed by Wes Brewer et. Al. We focus on analyzing distributed machine-learning systems, via machine-learned surrogate models, across varied hardware environments. By deploying the OSMI Benchmark on platforms like Rivanna HPC, WSL, and Ubuntu, we offer a comprehensive study of system performance under different configurations. The paper presents insights into optimizing distributed machine learning systems, enhancing their scalability and efficiency. We also develope a framework for automating the OSMI benchmark.

Introdcution

With the proliferation of machine learning as a tool for science, the need for efficient and scalable systems is paramount. This paper explores the Open Surrogate Model Inference (OSMI) Benchmark, a tool for testing the performance of machine-learning systems via machine-learned surrogate models. The OSMI Benchmark, originally created by Wes Brewer and colleagues, serves to evaluate various configurations and their impact on system performance.

Our research pivots around the deployment and analysis of the OSMI Benchmark across various hardware platforms, including the high-performance computing (HPC) system Rivanna, Windows Subsystem for Linux (WSL), and Ubuntu environments.

In each experiment, there are a variable number of TensorFlow model server instances, overseen by a HAProxy load balancer that distributes inference requests among the servers. Each server instance operates on a dedicated GPU, choosing between the V100 or A100 GPUs available on Rivanna. This setup mirrors real-world scenarios where load balancing is crucial for system efficiency.

On the client side, we initiate a variable number of concurrent clients executing the OSMI benchmark to simulate different levels of system load and analyze the corresponding inference throughput.

On top of the original OSMI-Bench, we implemented an object-oriented interface in Python for running experiments with ease, streamlining the process of benchmarking and analysis. The experiments rely on custom-built images based on NVIDIA’s tensorflow image. The code works on several hardwares, assuming the proper images are built.

Additionally, We develop a script for launching simultaneous experiments with permutations of pre-defined parameters with Cloudmesh Experiment-Executor. The Experiment Executor is a tool that automates the generation and execution of experiment variations with different parameters. This automation is crucial for conducting tests across a spectrum of scenarios.

Finally, we analyze the inference throughput and total time for each experiment. By graphing and examining these results, we draw critical insights into the performance dynamics of distributed machine learning systems.

In summary, a comprehensive examination of the OSMI Benchmark in diverse distributed ML systems is provided. We aim to contribute to the optimization of these systems, by providing a framework for finding the best performant system configuration for a given use case. Our findings pave the way for more efficient and scalable distributed computing environments.

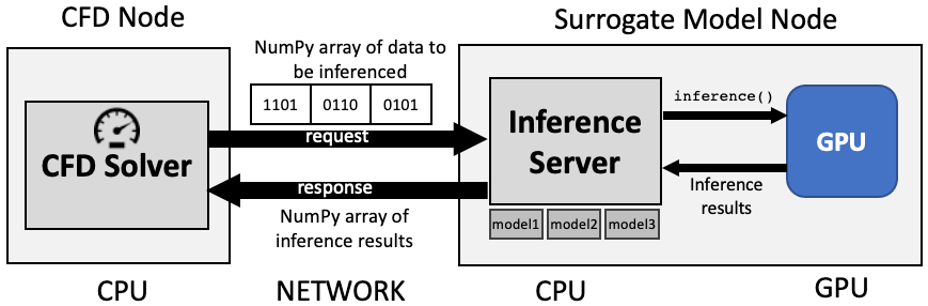

The architectural view of the benchmarks are depictued in Figure 1 and Figure 2.

Figure 1: Surrogate calculations via a Inference Server.

Figure 2: Possible benchmark configurations to measure sped of parallel iference.

References

Brewer, Wesley, Daniel Martinez, Mathew Boyer, Dylan Jude, Andy Wissink, Ben Parsons, Junqi Yin, and Valentine Anantharaj. “Production Deployment of Machine-Learned Rotorcraft Surrogate Models on HPC.” In 2021 IEEE/ACM Workshop on Machine Learning in High Performance Computing Environments (MLHPC), pp. 21-32. IEEE, 2021, https://ieeexplore.ieee.org/abstract/document/9652868, Note that OSMI-Bench differs from SMI-Bench described in the paper only in that the models that are used in OSMI are trained on synthetic data, whereas the models in SMI were trained using data from proprietary CFD simulations. Also, the OSMI medium and large models are very similar architectures as the SMI medium and large models, but not identical. ↩︎

Brewer, Wesley, Greg Behm, Alan Scheinine, Ben Parsons, Wesley Emeneker, and Robert P. Trevino. “iBench: a distributed inference simulation and benchmark suite.” In 2020 IEEE High Performance Extreme Computing Conference (HPEC), pp. 1-6. IEEE, 2020. ↩︎

Brewer, Wesley, Greg Behm, Alan Scheinine, Ben Parsons, Wesley Emeneker, and Robert P. Trevino. “Inference benchmarking on HPC systems.” In 2020 IEEE High Performance Extreme Computing Conference (HPEC), pp. 1-9. IEEE, 2020. ↩︎

Brewer, Wesley, Chris Geyer, Dardo Kleiner, and Connor Horne. “Streaming Detection and Classification Performance of a POWER9 Edge Supercomputer.” In 2021 IEEE High Performance Extreme Computing Conference (HPEC), pp. 1-7. IEEE, 2021. ↩︎

Gregor von Laszewski, J. P. Fleischer, and Geoffrey C. Fox. 2022. Hybrid Reusable Computational Analytics Workflow Management with Cloudmesh. https://doi.org/10.48550/ARXIV.2210.16941 ↩︎

6.9 - Particle dynamics

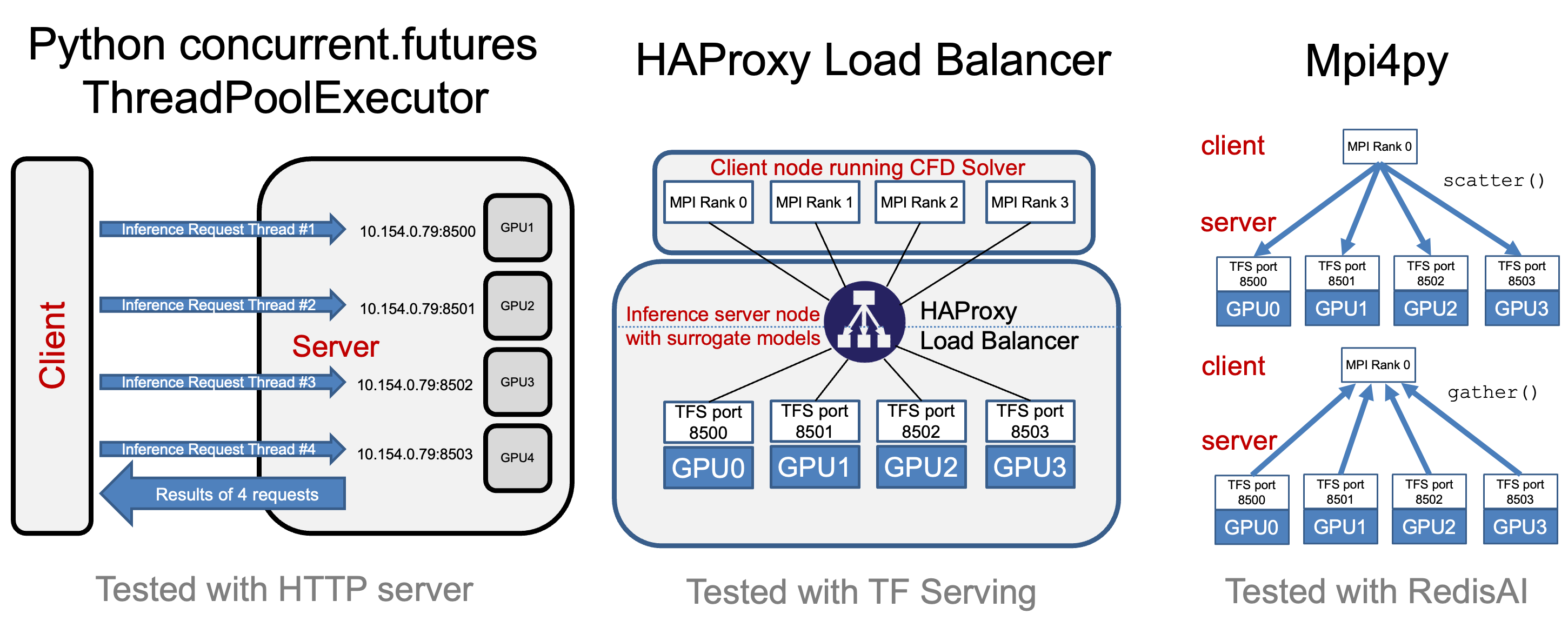

Recurrent Neural Nets as a Particle Dynamics Integrator

The second IU initial application shows a rather different type of surrogate and illustrates an SBI goal to collect benchmarks covering a range of surrogate designs. Molecular dynamics simulations rely on numerical integrators such as Verlet to solve Newton’s equations of motion. Using a sufficiently small time step to avoid discretization errors, Verlet integrators generate a trajectory of particle positions as solutions to the equations of motions. In 1, 2, 3, the IU team introduces an integrator based on recurrent neural networks that is trained on trajectories generated using the Verlet integrator and learns to propagate the dynamics of particles with timestep up to 4000 times larger compared to the Verlet timestep. As shown in Fig. 4 (right) the error does not increase as one evolves the system for the surrogate while standard Verlet integration in Fig. 4 (left) has unacceptable errors even for time steps of just 10 times that used in an accurate simulation. The surrogate demonstrates a significant net speedup over Verlet of up to 32000 for few-particle (1 - 16) 3D systems and over a variety of force fields including the Lennard-Jones (LJ) potential. This application uses a recurrent plus dense neural network architecture and illustrates an important approach to learning evolution operators which can be applied across a variety of fields including Earthquake science (IU work in progress) and Fusion 4.

Fig. 4: Average error in position updates for 16 particles interacting with an LJ potential, The left figure is standard MD with error increasing for ∆t as 10, 40, or 100 times robust choice (0.001). On the right is the LSTM network with modest error up to t = 106 even for ∆t = 4000 times the robust MD choice.

References

JCS Kadupitiya, Geoffrey C. Fox, Vikram Jadhao, “GitHub repository for Simulating Molecular Dynamics with Large Timesteps using Recurrent Neural Networks.” [Online]. Available: https://github.com/softmaterialslab/RNN-MD. [Accessed: 01-May-2020] ↩︎

J. C. S. Kadupitiya, G. C. Fox, and V. Jadhao, “Simulating Molecular Dynamics with Large Timesteps using Recurrent Neural Networks,” arXiv [physics.comp-ph], 12-Apr-2020 [Online]. Available: http://arxiv.org/abs/2004.06493 ↩︎

J. C. S. Kadupitiya, G. Fox, and V. Jadhao, “Recurrent Neural Networks Based Integrators for Molecular Dynamics Simulations,” in APS March Meeting 2020, 2020 [Online]. Available: http://meetings.aps.org/Meeting/MAR20/Session/L45.2. [Accessed: 23-Feb-2020] ↩︎

J. Kates-Harbeck, A. Svyatkovskiy, and W. Tang, “Predicting disruptive instabilities in controlled fusion plasmas through deep learning,” Nature, vol. 568, no. 7753, pp. 526–531, Apr. 2019 [Online]. Available: https://doi.org/10.1038/s41586-019-1116-4 ↩︎

6.10 - PtychoNN: deep learning network for ptychographic imaging that predicts sample amplitude and phase from diffraction data.

Metadata

Model ptychonn.json

Datasets ptychonn_20191008_39.json

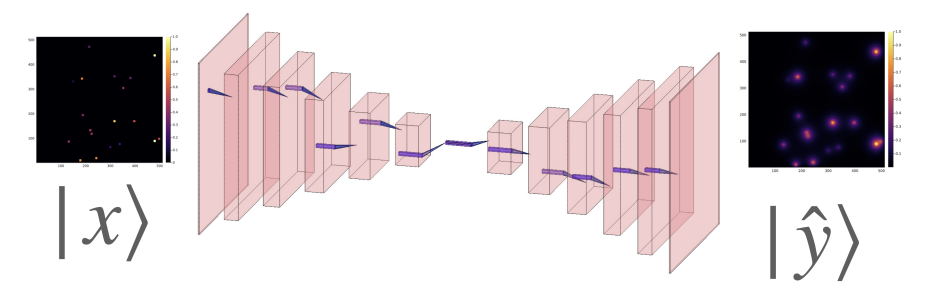

PtychoNN, uses a deep convolutional neural network to predict realspace structure and phase from far-field diffraction data. It recovers high fidelity amplitude and phase contrast images of a real sample hundreds of times faster than current ptychography reconstruction packages and reduces sampling requirements 1

References

Mathew J. Cherukara, Tao Zhou, Youssef Nashed, Pablo Enfedaque, Alex Hexemer, Ross J. Harder, Martin V. Holt; AI-enabled high-resolution scanning coherent diffraction imaging. Appl. Phys. Lett. 27 July 2020; 117 (4): 044103. https://doi.org/10.1063/5.0013065 ↩︎

7 - Software

A list of software we use to make things easiers

7.1 - cloudmesh

Overview

Cloudmesh allows the creation of an extensible commandline and commandshell tool based internally on a number of python APIs that can be loaded conveniently through plugins.

Plugins useful for this effort include

- cloudmesh-vpn1 – a convenient way to configure VPN

- cloudmesh-common2 – useful common libraries including a StopWatch for benchmarking

- cloudmesh-cmd53 – a plugin manager that allows plugins to be integrated as commandline tool or command shell

- cloudmesh-ee4 – A pluging to create AI grid searchs using LSF and SLURM jobs

- cloudmesh-cc5 – A plugin to allow benchmarks to be run in coordination on heterogeneous compute resources and multiple clusters

- cloudmesh-apptainer6 – mangae apptainers via a Python API

Cloudmesh has over 100 plugins coordinated at http://github.com/cloudmesh

References

Gregor von Laszewski, J. P. Fleischer, and Geoffrey C. Fox. 2022. Hybrid Reusable Computational Analytics Workflow Management with Cloudmesh. https://doi.org/10.48550/ARXIV.2210.16941 ↩︎

7.2 - sabath

Introduction

SABATH provides benchmarking infrastructure for evaluating scientific ML/AI models. It contains support for scientific machine learning surrogates from external repositories such as SciML-Bench.

The software dependences are explicitly exposed in the surrogate model definition, which allows the use of advanced optimization, communication, and hardware features. For example, distributed, multi-GPU training may be enabled with Horovod. Surrogate models may be implemented using TensorFlow, PyTorch, or MXNET frameworks.

Models

Models are collected so far at

References

8 - Meeting Notes

8.1 - Poster

We are happy to announce a poster about the SBI FAIR project.

Poster

https://sbi-fair.github.io/docs/notes/poster/sbi-fair-poster-finalv2.pdf

8.2 - Links

Overall Project Links

- Google-group: https://groups.google.com/g/sbi-fair

- Website: https://sbi-fair.github.io/

- Publications: https://sbi-fair.github.io/docs/publications/

- The directory from proposal writing: DOE_FAIR2020-Surrogates

- Directory for this proposal: Afteraward

- Meeting Summaries Report:: https://docs.google.com/document/d/1cqMOkV9Cag6EB6HI6fR20gwhVwUeG5yijtJ3aEW0Crs

8.3 - Meeting Notes 02-05-2024

Notes

Virginia

Virginia started a list of surrogates that would help prepare any poster necessary https://docs.google.com/presentation/d/1LonfbydMlQyLBv5vh8tjATv9BxdN7GmjuU8RFyuK5aw/edit#slide=id.g2acfd0f37ff_1_151

Virginia status is https://docs.google.com/presentation/d/1LonfbydMlQyLBv5vh8tjATv9BxdN7GmjuU8RFyuK5aw/edit#slide=id.g2acfd0f37ff_1_100 and other slides here plus https://docs.google.com/presentation/d/1fqKphJlK4Q_zE1wIAxHs73c4LHFjAKzjXIiMSS_opnw/edit?usp=sharing

Web page https://sbi-fair.github.io/

Rutgers

ASCR-PI-Meeting-Feb-2024-Rutgers

Indiana

- Indiana has 2 surrogates.

- Ions in nano confinement.This code allows users to simulate ions confined between material surfaces that are nanometers apart, and extract the associated ionic structure.

time evolution: GitHub: Code for our paper “Simulating Molecular Dynamics with Large Timesteps using Recurrent Neural Networks”

See powerpoint sbi_Jadhao_2024.pptx

ANL

UTK

Other

Last Joint Presentation SBI DOE Presentation November 28 2022.pptx

The poster is FoxG_FAIR Surrogate Benchmarks .pptx or Abstract 250 words

Replacing traditional HPC computations with deep learning surrogates can dramatically improve the performance of simulations. We need to build repositories for AI models, datasets, and results that are easily used with FAIR metadata. These must cover a broad spectrum of use cases and system issues. The need for heterogeneous architectures means new software and performance issues. Further surrogate performance models are needed. The SBI (Surrogate Benchmark Initiative) collaboration between Argonne National Lab, Indiana University, Rutgers, University of Tennessee, and Virginia (lead) with MLCommons addresses these issues. The collaboration accumulates existing and generates new surrogates and hosts them (a total of around 20) in repositories. Selected surrogates become MLCommons benchmarks. The surrogates are managed by a FAIR metadata system, SABATH, developed by Tennessee and implemented for our repositories by Virginia.

The surrogate domains are Bragg coherent diffraction imaging, ptychographic imaging, Fully ionized plasma fluid model closures, molecular dynamics(2),

turbulence in computational fluid dynamics, cosmology, Kaggle calorimeter challenge(4), virtual tissue simulations(2), and performance tuning. Rutgers built a taxonomy using previous work and protein-ligand docking, which will be quantified using six mini-apps representing the system structure for different surrogate uses. Argonne has studied the data-loading and I/O structure for deep learning using inter-epoch and intra-batch reordering to improve data reuse. Their system addresses communication with the aggregation of small messages. They also study second-order optimizers using compression balancing accuracy and compression level. Virginia has used I/O parallelization to further improve performance. Indiana looked at ways of reducing the needed training set size for a given surrogate accuracy.

[1] Web Page for Surrogate Benchmark Initiative SBI: FAIR Surrogate Benchmarks Supporting AI and Simulation Research. Web Page, January 2024. URL: https://sbi-fair.github.io/. [2] E. A. Huerta, Ben Blaiszik, L. Catherine Brinson, Kristofer E. Bouchard, Daniel Diaz, Cate- rina Doglioni, Javier M. Duarte, Murali Emani, Ian Foster, Geoffrey Fox, Philip Harris, Lukas Heinrich, Shantenu Jha, Daniel S. Katz, Volodymyr Kindratenko, Christine R. Kirk- patrick, Kati Lassila-Perini, Ravi K. Madduri, Mark S. Neubauer, Fotis E. Psomopoulos, Avik Roy, Oliver R ̈ubel, Zhizhen Zhao, and Ruike Zhu. Fair for ai: An interdisciplinary and international community building perspective. Scientific Data, 10(1):487, 2023. URL: https://doi.org/10.1038/s41597-023-02298-6. Note: More references can be found on the Web site

Latex version https://www.overleaf.com/project/65b7e7262188975739dae845 with PDF FoxG_FAIR Surrogate Benchmarks _abstract.pdf https://drive.google.com/file/d/1ytrrii09tKKS2AAVuUTKGw8tmM2Xf8-N/view?usp=drive_link

Topics

Fitting of hardware and software to surrogates Uncertainty Quantification of the surrogate estimates Minimize Training Data Size needed to get reliable surrogates for a given accuracy choice. Develop and test surrogate Performance Models Findable, Accessible, Interoperable, and Reusable FAIR data ecosystem for HPC surrogates SBI collaborates with Industry and a leading machine learning benchmarking activity – MLPerf/MLCommons

Rutgers 2 slides Detailed example: AI-accelerated Protein-Ligand Docking Taxonomy and 6 mini-apps

Tennessee 6 slides SABATH structure and UTK use Cosmoflow in detail

Argonne 7 slides 5 slides High-Performance Data Loading Framework for Distributed DNN Training with Maximize data reuse: Inter-Epoch Reordering (InterER) has minimal impact on the accuracy. Intra-Batch Reordering (IntraBR) that has no impact on the accuracy. I/O balancing A strategy that aggregates small reads into a chunk read.

2 slides Scalable Communication Framework for Second-Order Optimizers using compression balancing accuracy and compression amount

Indiana Goal 1: Develop surrogates for nanoscale molecular dynamics (MD) simulations Surrogate for MD simulations of confined electrolyte ions Surrogate for time evolution operators in MD simulations

Goal 2: Investigate surrogate accuracy dependence on training dataset size

Virginia Work on I/O and Communicaion optimization Done Two Argonne one IU and one MLCommons

To do Onr argonne Fully ionized plasma fluid model closures Calorimeter Challenge: 3 (NF:CaloFlow, Diffusion:CaloDiffusion, CaloScore v2, VAEQVAE Last IU UTK Cosmoflow Performance Virtual Tissue (2) 6 Rutgers

8.4 - Meeting Notes 01-08-2024

Present: Geoffrey Fox, Gregor von Laszewski, Przemek Porebski, Piotr Luszczek, Shantenu Jha

Apologies Vikram Jadhao

- Shantenu described the background to the PI meeting for ASCR in February that was modeled on successful SCIDAC-wide meetings. It is not clear if sessions will be plenary or organized around Program manager portfolios.

- Virginia started a list of surrogates that would help prepare any poster necessary

- https://docs.google.com/presentation/d/1LonfbydMlQyLBv5vh8tjATv9BxdN7GmjuU8RFyuK5aw/edit#slide=id.g2acfd0f37ff_1_151

- Argonne would add work on I/O, compression, and second-order methods.

- Rutgers has surrogates to list, plus work on effective performance and their taxonomy of surrogate types.

- Indiana was not available due to travel, but has work on data dependence and surrogates for sustainability (a new paper).

- Tennessee has two surrogates, MiniWeatherML and Performance. Also has SABATH

- We did not set a next meeting until the PI meeting was clearer.

- Later email from DOE set the poster deadline as January 29.

8.5 - Meeting Notes 10-30-2023

Minutes of SBI-FAIR October 30 2023, Meeting

Present: Geoffrey Fox, Gregor von Laszewski, Przemek Porebski, Piotr Luszczek, Vikram Jadhao,** **Shantenu Jha, Margaret Lentz

- AI for Science report AI for Science, Energy, and Security Report | Argonne National Laboratory

- ASCAC Advanced Scientific Comput… | U.S. DOE Office of Science(SC)

- Hal Finkel’s (Director Research ASCR Advanced Scientific Computing talk ASCR Research Priorities is important

- Anticipated Solicitations in FY 2024

- Compared to FY 2023, expect a smaller number of larger, more-broadly-scoped solicitations driving innovation across ASCR’s research community.

- In appropriate areas, ASCR will expand its strategy of solicitating longer-term projects and, in most areas, encouraging partnerships between DOE National Laboratories, academic institutions, and industry.

- ASCR will continue to seek opportunities to expand the set of institutions represented in our portfolio and encourages our entire community to assist in this process by actively exploring potential collaborations with a diverse set of potential partners.

- Areas of interest include, but are not limited to:

- Applied mathematics and computer science targeting quantum computing across the full software stack.

- Applied mathematics and computer science focused on key topics in AI for Science, including scientific foundation models, decision support for complex systems, privacy-preserving federated AI systems, AI for digital twins, and AI for scientific programming.

- Microelectronics co-design combining innovation in materials, devices, systems, architectures, algorithms, and software (including through Microelectronics Research Centers).

- Correctness for scientific computing, data reduction, new visualization and collaboration paradigms, parallel discrete-event simulation, neuromorphic computing, and advanced wireless for science.

- Continued evolution of the scientific software ecosystem enabling community participation in exascale innovation, adoption of AI techniques, and accelerated research productivity.

- She noted the Executive order today, Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence | The White House, and this message (trustworthiness) will be reflected in DOE programs.

- Microelectronics will be a thrust

- NAIRR $140M is important

Rutgers

Shantenu Jha gave a thorough presentation

There were four items below with status given in **bold**

- Develop and Characterize Surrogates in the Context of NVBL Pipeline

- Published in Scientific Reports: Performance of Surrogate models without loss of accuracy (Stage 1 of NVBL Drug discovery pipeline) (Done)

- Performance & taxonomy of surrogates coupled to HPC (paper in a month) 2. Survey surrogates coupled to HPC simulations (Almost complete 2023-Q3) 3. Generalized framework of surrogate performance (Ongoing 2023-Q4) 1. Optimal Decision making in the DD pipeline (published)

- Tools (Ongoing) 4. Preliminary work on mini-apps under review; extend to FAIR mini-apps for surrogates taxonomy 5. Deployed on DOE leadership class machines

- Interact with MLCommons** (Anticipate start in 2023/Q4)**

6. Benchmarks for surrogate coupled to HPC workflows

Indiana

- Vikram Jadhao presented

- Accuracy speed up tradeoff for molecular dynamics surrogates

- Looking for datasets with errors

- Followed up with later discussions with Rutgers so can feed into software

Tennessee

- Piotr Luszczek gave presentation

- He reported on progress with SAbath and MiniWeatherML

- He is giving several presentations

Virginia

- **Presentation **

- We discussed progress with surrogates and enhancements to Sabath

- We discussed repository and noted that different models need different specific environments

- Requirements.txt will specify this

- Different target hardware needs to be supported

- OSMIBench will be released before end of year

- Support separate repositories in the future

- We discussed papers and, in particular, a poster at the Oak Ridge OLCF users meeting.

Argonne

- Finished the contract but will, of course, complete their papers.

8.6 - Meeting Notes 09-25-2023

Minutes of SBI-FAIR September 25 2023, Meeting

Present: Geoffrey Fox, Gregor von Laszewski, Przemek Porebski, Piotr Luszczek, Vikram Jadhao

Apologies: Shantenu Jha, Kamil Iskra, Margaret Lentz

Virginia

- **Presentation **

- Repository

- Specific environments are needed for different models

- Requirements.txt

- Different hardware support

- Copy MLCommons approach

- MLCube as a target

- Tools to generate targets

- Release before supercomputing

- Add MLCommons benchmarks

- Separate repositories in version 2

Argonne

- Finished the contract but will, of course, complete their papers.

Tennessee

- Piotr presented

- SABATH updates

- IBM-NASA Foundation model has multi-part datasets

- Cloudmesh uses SABATH

- Smokey Mountain presentation tomorrow

Rutgers

- See end of

- The first mini-app is ready

Indiana

- Will update the nanoconfinement app and Nanohub version still used

- Second surrogate being worked on

- Soft label work continuing

- Interested in AI for Instruments

- Surrogates help Sustainability as save energy

8.7 - Meeting Notes 08-25-2023

Minutes of SBI-FAIR August 28 2023, Meeting

- Monday, September 25, 2023, https://virginia.zoom.us/my/gc.fox

Present: Geoffrey Fox, Gregor von Laszewski, Przemek Porebski, Kamil Iskra,, Baixi Sun. Piotr Luszczek,

Apologies: Shantenu Jha, Vikram Jadhao, Margaret Lentz (Rutgers and Indiana not presented)

Virginia

- SABATH extensions

- OSMIBench improved

- Experiment Executor added in Cloudmesh

- Argonne surrogates supported

Argonne

- Baixi presented their new work

- SOLAR paper with artifacts submitted

- The communication bottleneck in the second order method K-FAC addressed with compression and sparsification methods with SSO Framework

Tennessee

- Piotr described Virginia’s enhancements

- IBM-NASA multi-part datasets in Foundation model

- Smokey Mountain Conference

- Integration with MLCommons Croissant using Schema.org

8.8 - Meeting Notes 07-31-2023

Minutes of SBI-FAIR July 31 2023, Meeting

- Monday, August 28, 2023, https://virginia.zoom.us/my/gc.fox

- Monday, September 25, 2023, https://virginia.zoom.us/my/gc.fox

Present: Geoffrey Fox, Gregor von Laszewski, Przemek Porebski, Kamil Iskra, Xiaodong Yu, Baixi Sun. Piotr Luszczek, Shantenu Jha

Apologies: Vikram Jadhao,

Virginia

- Geoffrey presented the Virginia Update https://docs.google.com/presentation/d/132erkV49Lgd0ZFx-AtNWJPRwTrxc480m-rU6jmvMmYA/edit?usp=sharing, which also included Indiana (see below)

- Good progress with Argonne Surrogates

- We have added PtychoNN to SABATH, and we have run AutoPhaseNN on Rivanna

- We reviewed other surrogates from Virginia including OSMIBench and a new Calorimeter simulation

- We are working well with Tennessee on SABATH

- Gregor finished with a little study on use of Rivanna – the Virginia Supercomputer

Indiana

- Vikram is still traveling in India and was not able to join today’s meeting. He shared by email the following updates.These are included in Virginia Presentation

- the nanoconfinement surrogate repository is updated with the latest results from the sample size study published in JCTC, Probing Accuracy-Speedup Tradeoff in Machine Learning Surrogates for Molecular Dynamics Simulations | Journal of Chemical Theory and Computation

- https://github.com/softmaterialslab/nanoconfinement-md/tree/master/python/surrogate_samplesize

- Coordinate with Fanbo Sun who is leading the development of this surrogate and conducted the sample size study that I have shared in our meetings.

- Working on preparing the dataset for the follow-up study to JCTC

- literature review: if folks are interested, the special issue on machine learning for molecular simulation in JCTC has many interesting papers (including surrogates): Journal of Chemical Theory and Computation | Vol 19, No 14

Argonne

- Argonne’s funds have essentially finished

- Xiaodong Yu is moving to Stevens

- New compression study comparing methods that are error bounded or nott – their performance differs by a factor of 4-6

- Baixi gave an update presentation SSO: A Highly Scalable Second-order Optimization Framework ffor Deep Neural Networks via Communication Reduction

- Quantized Stochastic Gradient Descent QSGD Non error bounded

- Model accuracy versus compression tradeoff

- Unable to utilize error-feedback due to GPU memory being filled by large models and large batch size.

- Looked at different rounding methods

- Stochastic rounding preserves direction better as not so many zeros

- Revised our I/O paper i.e., SOLAR based on the reviews, submitting to ppopp’24 with new experiments and better writeup

Rutgers

- The surrogate survey paper is making good progress with DeepDriveMD other motifs.

- Andre Merzy is working on associated Miniapps (surrogates)

- Will work with MLCommons in October

Tennessee

- Piotr presented his groups work https://drive.google.com/file/d/1ep9zxdv25I3MJmPt5YcJi32SHu5BAF4J/view?usp=sharing

- MiniWeatherML running with MPI and with or without CUDA.

- No external dataset is required

- SABATH making good progress in collaboration with Virginia

- They are working on Cosmoflow

- Piotr noted that those sites that are continuing with the project will need to submit a project report very soon. Geoffrey shared his project report to allow a common story

8.9 - Meeting Notes 06-26-2023

Minutes of SBI-FAIR June 26, 2023, Meeting

**Present: **Geoffrey Fox, Gregor von Laszewski, Przemek Porebski, Kamil Iskra, Xiaodong Yu, Baixi Sun. Vikram Jadhao, Piotr Luszczek, Shantenu Jha, Margaret Lentz

Virginia

- This was presented by Geoffrey

- He described work on new surrogates, including LHC Calorimeter, Epidemiology, Extended virtual tissue, and Earthquake

- He described work on the repository and SABATH

- This involved two existing AI models CloudMask and OSMIBench

- Shantenu Jha asked about the number of inferences per second.

- From MLCommons Science Working minutes, we find for OSMIBench

- On Summit, with 6 GPUs per node, one uses 6 instances of TensorFlow server per node. One uses batch sizes like 250K with a goal of a billion inferences per second

Argonne

- Continue to work on Second-order Optimization Framework for Deep Neural Networks with Communication Reduction

- Baixi Sun presented the details

- He introduced quantization to lower precision QSGD which gives encouraging results, although In one case quantization method failed in the eigenvalue stage

- We removed Rick Stevens from the Google Group

- Geoffrey mentioned his ongoing work on improving shuffling using Arrow vector format; he will share the paper when ready

Indiana

- Vikram gave the presentation without slides

- Continuing study of needed training set for the Ions confinement surrogate Probing Accuracy-Speedup Tradeoff in Machine Learning Surrogates for Molecular Dynamics Simulations | Journal of Chemical Theory and Computation

- New dataset to explore soft labels reflecting computational uncertainty to reduce errors

Rutgers

- Shantenu presented

- Nice paper on surrogate classes with Wes Brewer, who works with Geoffrey on OSMIBench

- Mini-apps for each of the 6 motifs that need FAIR metadata

- 5 motifs use surrogates; one generates them

- He described an interesting workshop on molecular simulations

- He noted that Aurora training trillion parameter foundation model for science

- LLMs need 10 power 8 exaflops: Need to optimize!

- Vikram noted SIMULATION INTELLIGENCE: TOWARDS A NEW GENERATION OF SCIENTIFIC METHODS

Tennessee

- Piotr presented slides

- CosmoFlow on 8 GPUs is running well

- He introduced the MiniWeatherML mini-app

- CUDA-aware pointers must be explicitly specified in the FAIR schema

- Test in PETSc leaves threaded MPI in an invalid state

- Alternative MPIX query interface varies between MPI implementations

- GPU Direct copy support is optional

- SABATH system is moving ahead with a focus on adding MPI support

- Piotr is now the PI of this project at UTK. We removed Cade Brown, Jack Dongarra, and Deborah Penchof from the Google Group

8.10 - Meeting Notes 05-29-2023

Minutes of SBI-FAIR May 29, 2023, Meeting

Present: Geoffrey Fox, Xiaodong Yu, Baixi Sun. Piotr Luszczek,

Virginia

- Comment on Surrogates produced by generative methods versus those that map particular inputs to particular outputs. In examples like experimental physics apparatus simulations, you only need output and not input. Methods need to sample output data space correctly.

- Geoffrey also described earlier experiences using second-order methods and least squares/maximum likelihood optimizations for physics data analysis. One can use eigenvalue/vector decomposition or the Levenberg-Marquardt method.

Tennessee

- SABATH student continuing over summer

- New surrogate MiniWeatherML is not PyTorch Tensorflow from Oak Ridge

- “Hello World” for weather. https://github.com/mrnorman/miniWeatherML

Argonne

- Xiaodong summarized the situation, and Baixi gave a detailed presentation

- Working on reducing data size, but compression technology seems difficult

- The error-bounded approach doesn’t seem to work very well, and so Argonne are investigating other methods. There is currently no method that preserves good accuracy and gives significant reduction.

- Looking at the performance of first and second-order gradients

- What can you drop in second order method – lots of data are irrelevant but not what current lossy compression seems to be doing

- Model parallelism for calculating eigensystems and then Data parallelism

8.11 - Meeting Notes 04-03-2023

Minutes of SBI-FAIR April 3 2023, Meeting

Present: Geoffrey Fox, Gregor von Laszewski, Przemek Porebski, Piotr Luszczek, Kamil Iskra, Xiaodong Yu, Baixi Sun. Vikram Jadhao, Margaret Lentz (DOE),

Regrets: Shantenu Jha

**DOE **had no major announcements but reminded us of links